Vibe Responsibly

Vibe coding is like a really sharp knife.

Vibe coding “is claimed by its advocates to allow even amateur programmers to produce software (…)”.1 This is neither a new concept, nor even a new claim. No-code and low-code solutions have been making it for decades. Geocities was launched in 1994. QuickBase in 1999. WordPress in 2003 - and today it powers 43.6% of all websites.

If the claim isn’t new, then what is?

The Unprecedented Freedom of Vibe Coding

WordPress remains stifling, perhaps more so than Geocities ever was. It wants to operate under a specific mental model of a homepage with either posts or subpages. It’s marvelously extensible - but that extensibility only helps if someone already built what you want. Otherwise you’ll need to either piece together what you need yourself or contract a developer.

Sometimes “contract a developer” is spoken with the same emphasis as “contract a disease”. Perhaps that’s because developers could - and did - demand a hefty premium during the Golden Age of software development. Perhaps because software development is not always well understood, and the expectations of dollar cost to value delivered can sometimes be a little outlandish.

Both sides were frustrated. No-code and low-code services - limiting as they are - flourished. Against this backdrop it’s easy to assume that it’d be the business users who would jump into vibe coding. In reality it’s prevalent on both sides of the tech/business divide.

I’m an experienced developer - and a vibe coder.

From Oversight to Collaboration

I remember trying out the GitHub Copilot technical preview about four years ago. It wasn’t very good. It simply would not stop hallucinating in constructs from a certain popular framework the codebase didn’t use. But it was impressive in that it got as much right as it did.

Copilot improved enough to be usable rather quickly. It had a limited view of the codebase and still hallucinated a lot, but the next-line predictions were more useful than harmful. When we adopted it, it improved the velocity of admittedly already fantastic developers. Not by 10x, but appreciably. Even so, a non-technical user couldn’t use it to implement a feature, because it only suggested a few lines at a time.

A few months ago I tried out Cursor. I replaced Copilot with it after a brief testing period, but thought of it as the same features, done better.

Cursor’s completion felt much faster. It grasped the codebase better. I find it deliciously ironic that while Copilot was stuck at where my cursor was, Cursor would happily flit all over the file during a refactor.

It felt much less like keeping an eye out for Copilot’s hallucinations, and more like genuine collaboration. The statistical model behind Cursor was uncannily good at picking up where the user was going and adapting to help with that in seconds.

But I still didn’t get it - not yet. I wasn’t vibing.

From Collaboration to ~~Reviews~~ Vibes

Users of Cursor will note I haven’t said a word about the AI Pane of the editor. For the longest time I dismissed it as a gimmick. It was more convenient than pasting code into a prompt yourself and then copying results back out. I used it a few times, but it never merged into my workflow quite the way AI completion did.

That changed when Agent Mode released a while back. The models Cursor provides got the ability to search the codebase, run commands, and create or modify multiple files.

It’s an incredibly powerful tool.

Agent Mode became a part of my workflow and the characteristics of the cognitive load shifted once again. Previously I would spend time ideating the solution, then implement it myself - taking advantage of the completions as I went.

The ideation step remained identical. The next step, however, changed massively. Now I can dump the raw idea for the solution into the agent box and let it run. There’s a good chance that it will do 75-90% of the implementation in a couple of minutes.

This shifted my interaction model from largely hand-written to largely generated. Of course I would thoroughly check the changes the model proposed. The output always needs adjustments, better error handling, and tests beyond the happy path. But my role in the process changed. I was hooked. I was vibing.

I could afford to spend a lot more time thinking through a complete solution before the first prompt. I spent a lot more time improving and understanding halfway decent code than writing it, too. It helped in surprising ways. For example I no longer experience proximity myopia, where I would inadvertently paint myself into a corner.

Crucially, I have the knowledge to review and correct the code produced by a model. Most of the time it’s decent - not great. And even if it was great, I would still spend time reading it because putting something I don’t understand in production is a recipe for trouble. I also know which features absolutely, positively need to be done by hand because AI won’t cut it.

But what if the prompter doesn’t review the LLM’s output - or indeed if they cannot?

Vibes Gone Wrong

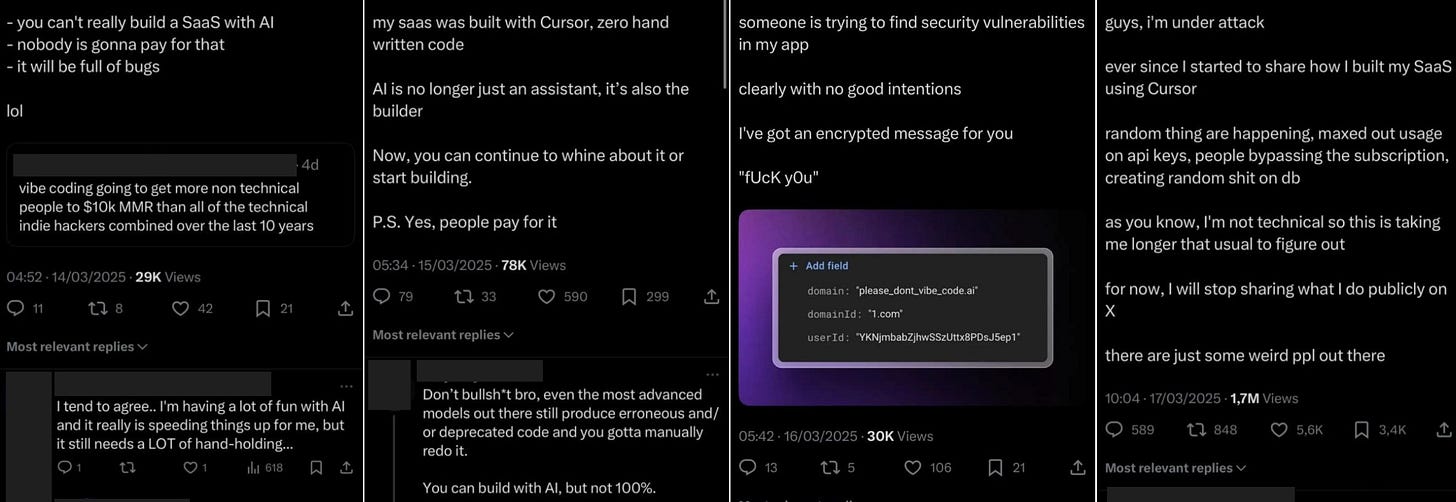

There’s a semi-famous series of four tweets making the rounds on the web. I’ll present them heavily anonymized here. To make it clear: seeking out this individual and giving them a hard time would be wrong. Don’t do it.

On the one hand my heart goes out to the poster, whom we’re going to call Dave. Security vulnerabilities in production are never fun. Having your business actively exploited is terrifying.

Dave sharing that his product was built entirely with AI, while also showing that he didn’t necessarily have the knowledge necessary to secure it, was not a good move for his operational security. It wouldn’t be even without Dave’s confrontational language. Giving up information about attack surfaces is never the play, whether wrapped in a boast or not.

Intrusion without intent to responsibly disclose vulnerabilities is a black-hat move. I do not condone black-hatting. I could question whether Dave would accept responsible disclosure in private with as much grace as he communicates publicly, but that’s neither here nor there.

Unreviewed or poorly understood code ending up in production is a nightmare in case something goes wrong. The code that went to production in this case was, at best, unreviewed.

Dave’s Tale

Let’s examine what options were at Dave’s disposal.

He could have gotten a software engineer to do a freelance audit of his codebase. If it’s not a very complex codebase - most MVPs aren’t - it might take maybe 8-10 billable hours. Because the software engineering market is in a downturn, this wouldn’t have been that expensive. The Golden Age is over, and prices are way down.

Armed with the audit results, Dave could have attempted to vibe code solutions, or handed them over to a contract developer for fixes. There are plenty of developers who would balk at fixing up “AI code”, but there definitely are some who are already doing it day in and day out.

These options certainly cost more than a $20 Cursor subscription. It’s easy to understand why Dave didn’t think he needed the expense, either. LLMs are great at being confidently wrong. I’m certain whichever model Dave used, it would have told him there are no security issues with its implementation if asked.

You might dismiss my proposed solutions. “Oh sure, the software engineer’s proposed solution to a problem is to hire a software engineer!” Well, yes. Until these tools immeasurably improve, you’ll need a human - equipped with the requisite knowledge - in the loop.

Vibe And Let Vibe

AI agents like those built into Cursor or Windsurf, in-terminal agents like Claude Code and Codex, or full “AI developers” like Devin are a reality. These incredibly powerful tools are available and in use today - both by technical users and by very non-technical ones.

I don’t think the companies selling them do a good job of explaining that this is experimental technology. They rush to market to capture the AI boom. In reality, at the moment these tools are like really sharp knives. Useful, and hard to hurt yourself with if you know how to handle them (though not impossible). But if you don’t…

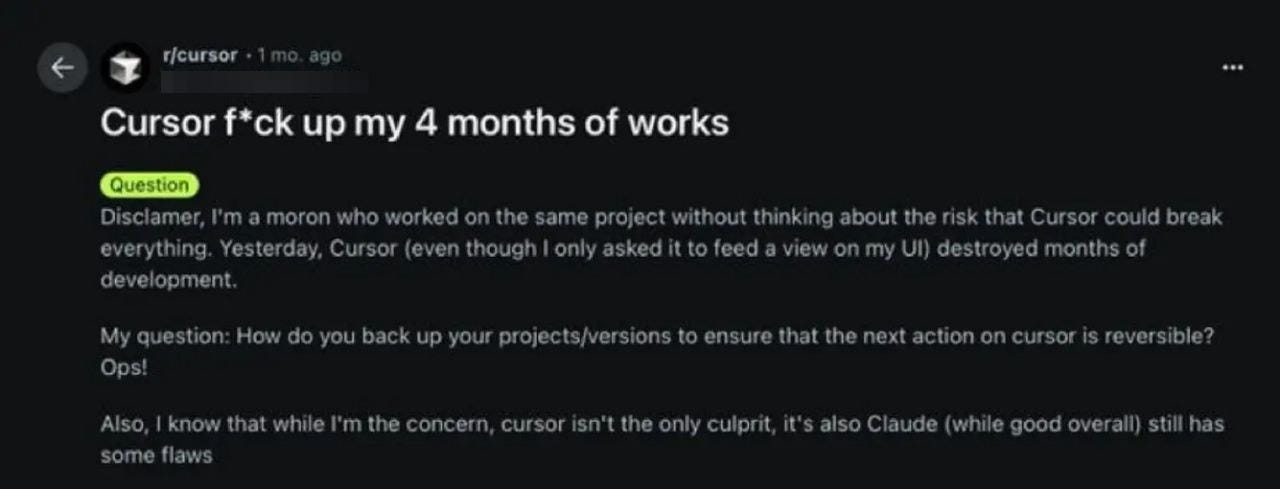

This poster was met with mostly sneers from an audience of - ostensibly - software engineers. Perhaps there were a few offering helpful suggestions down there, teaching the poster about version control or advising them to start as simple as taking backups. I certainly couldn’t find them in the avalanche of negativity. I would dismiss some as “the Reddit curse”, but the sheer volume saddened me.

My fellow software engineers: this is not helpful. This is gatekeeping at its lowest. If we are truly so insecure that we must put down someone taking their first shaky steps in software development, perhaps we deserve to be replaced by AI. More software is good; let’s help make sure it’s good software.

And vibe-coding founders: keep vibing, but vibe responsibly. Get a programmer to take a look at what Claude or ChatGPT made. Save yourself the headache and cost of dealing with breaches later down the line. I promise we’re not all greedy snobs - I don’t even believe most of us are.

Just… maybe don’t be like Dave.